I’ve had the pleasure of presenting the zio-prefetcher micro-library at zio-world 2022. Below is a quick summary; here are the slides and there’s the video (or watch it below).

A Street-fighting Trick for Software Engineers

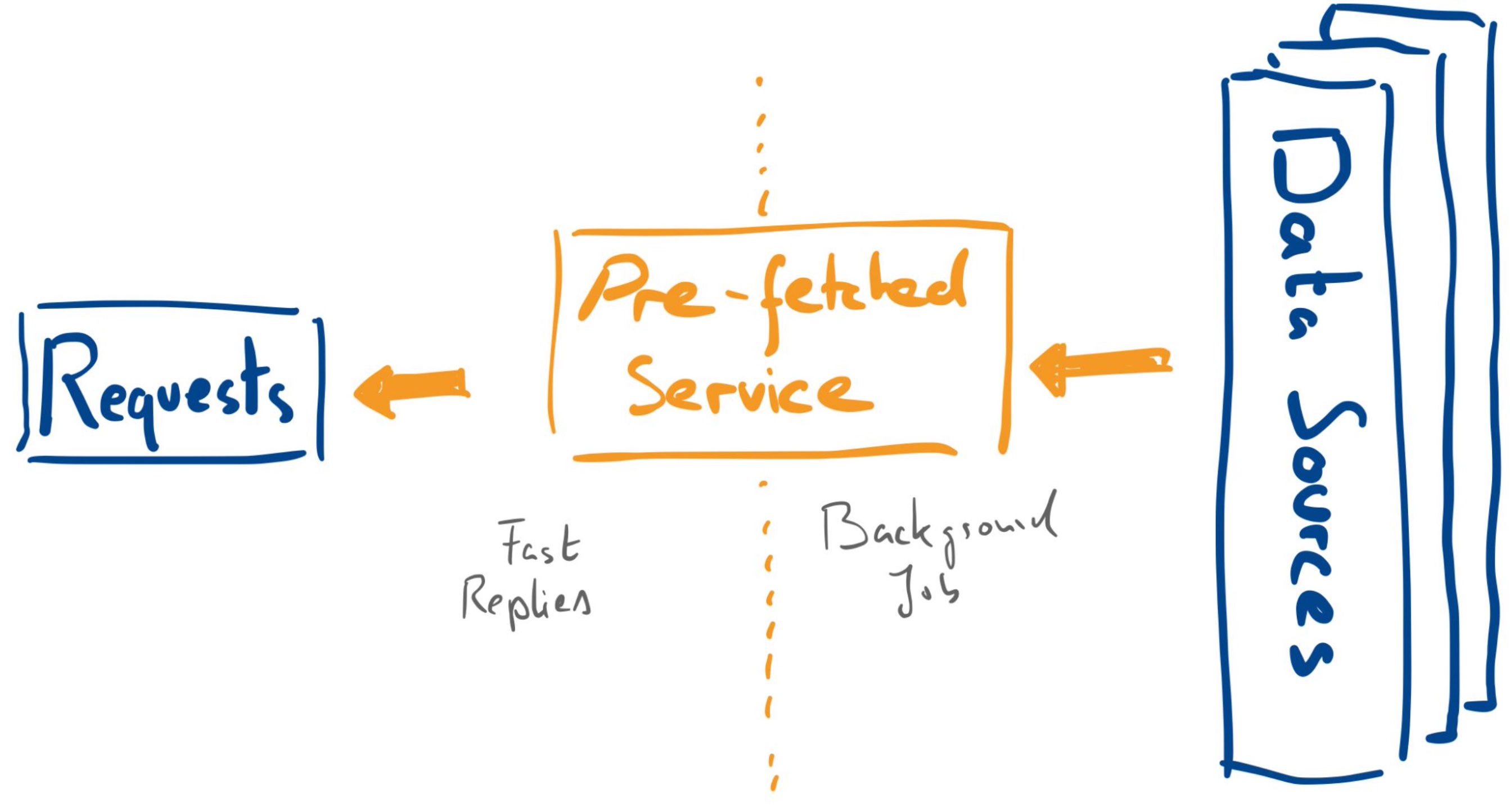

While there are a few reasons to adopt pre-fetching, such as slow databases and unreliable third-party systems, the core argument of the presentation boils down to:

RAM is seriously undervalued as a place of temporary storage

Add to this the fact that we tend to wring our hands excessively over consistency in the services we build, and we have everything we need for this slightly provocative proposition:

Why strike a delicate trade-off with availability and performance when you can drop consistency altogether?

There are many situations where displaying something quickly trumps displaying it slowly or not at all, even at the price of (slight) imprecision, especially if increasing consistency comes at a non-trivial cost.

Viability Above (Absolute) Correctness

The second point of the talk is about the potential increase in development speed you can get from accepting, even temporarily, a few quirks in your architecture[1]: if the survival of a project is contingent on building a first POC that delivers value, you’d better focus on delivering that value ASAP rather than worrying about peculiar consistency questions that will be irrelevant anyway if the project gets binned.

Interestingly, it is generally easier to get a couple more gigabytes of RAM allocated to the hardware that runs your services than to spawn additional services such as a distributed cache[2]: this is worth keeping in mind while you polish the v0.1 of whatever you are building.

Benefits

Summing up the benefits of the pre-fetcher approach, we have:

- (Predictable) Performance: fast if it’s there, fast if it’s not! In other words, get rid of annoying tail latencies: there is simply no slow or timing-out database query standing between a request and its response.

- Availability: the data source can happily bail out for a while! That’s OK, as you have a copy in memory.

- Internal Consistency: a carefully built pre-fetched state can remain self-consistent and will not suffer from inconsistencies introduced by multiple caches[3]. This can make it easier to reason about your system.

- Easy Purging: no need to invalidate external systems to get rid of pre-fetched data; just restart your service.
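
To make the first three benefits more concrete, here is a minimal sketch of the pre-fetching idea in plain Scala, using only the standard library. It is not the zio-prefetcher API: `SimplePrefetcher`, `get`, `refresh` and `refreshEverySeconds` are hypothetical names, and a `java.util.concurrent` scheduler stands in for ZIO fibers. The data is fetched once at startup and then refreshed in the background; requests only ever read the in-memory copy, and a failed refresh leaves the previous copy in place.

```scala
import java.util.concurrent.{Executors, ThreadFactory, TimeUnit}
import java.util.concurrent.atomic.AtomicReference
import scala.util.{Failure, Success, Try}

// Hypothetical, minimal pre-fetcher: NOT the zio-prefetcher API.
// `fetch` is assumed to return one immutable snapshot of everything requests need.
final class SimplePrefetcher[A](fetch: () => A, refreshEverySeconds: Long) {

  // Eager initial fetch: if the source is down at startup, construction throws.
  private val current = new AtomicReference[A](fetch())

  // Daemon thread, so a forgotten pre-fetcher does not keep the JVM alive.
  private val daemonThreads: ThreadFactory = (r: Runnable) => {
    val t = new Thread(r, "prefetcher-refresh")
    t.setDaemon(true)
    t
  }
  private val scheduler = Executors.newSingleThreadScheduledExecutor(daemonThreads)

  // Background refresh at a fixed rate, starting one period after construction.
  scheduler.scheduleAtFixedRate(
    () => refresh(),
    refreshEverySeconds,
    refreshEverySeconds,
    TimeUnit.SECONDS
  )

  // Serving a request: a memory read, never a call to the data source.
  def get: A = current.get()

  // One refresh attempt; on failure the previous snapshot is kept as-is.
  def refresh(): Unit = Try(fetch()) match {
    case Success(fresh) => current.set(fresh)
    case Failure(_)     => () // source unavailable: keep serving the old copy
  }

  // Stops future background refreshes.
  def stop(): Unit = scheduler.shutdown()
}
```

A request would call `get` once and work from that single snapshot, which is what keeps it self-consistent; a failing database only shows up as data that stops getting fresher. In ZIO itself the snapshot would more naturally live in a `Ref` updated by a forked fiber, but the shape of the idea is the same.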

Additionally, as touched on earlier, you get some benefits that are interesting to a project manager or product owner:

- Speed Premium: limited amount of time to try things out? Get the POC out the door faster and increase its chances of success.

- Unlock new use cases by experimenting more.

For the downsides, which are pretty obvious, check out the slides.

Not Overdoing it

Obviously, using one or more pre-fetchers won’t fix all of your slow dependencies: only those that are just too slow for your service to stay snappy can easily be hidden this way, while anything that clearly requires a long-running background job (say, once you start reasoning in dozens of minutes or hours) naturally remains off-limits, lest your service’s initialisation time explode.

There are other interesting ways in which this library might turn into a foot-gun, though: the streaming version lets you compose pre-fetchers to build new ones, at which point all bets are off.
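
One concrete way composition can bite (there are certainly others) is staleness adding up. Assume, purely for illustration, that pre-fetcher $i$ refreshes at a fixed interval $T_i$, that each of its fetches takes at most $d_i \le T_i$, and that every refresh succeeds. If a first pre-fetcher reads from the data source and a second one is built by periodically reading from the first, the worst-case age of the data a request sees grows with each layer:

$$
\mathrm{age}_{1} \le T_1 + d_1,
\qquad
\mathrm{age}_{2} \le (T_1 + d_1) + (T_2 + d_2)
$$

Each further layer adds its own $T_i + d_i$ term, and as soon as a refresh fails and the previous value is kept (which is exactly what buys the availability benefit), no such bound holds any more.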

Conclusion

Pre-fetching is a very simple concept, yet because it lacks a wider audience and can easily seem too dirty for most tastes, it ends up being a powerful tool that tends to be under-used. That’s why it’s one of my favourite street-fighting tricks for software engineers, and one I recommend.

I’d encourage everyone to toy with such ideas and concepts from time to time, even if you don’t end up using them: doing so may also improve the overall design of your systems.

If you’re wondering how the concept of a pre-fetcher came to be, here’s the background story.

[1] This clearly relates to the notion of technical debt. Remember that debt (technical or otherwise) is a powerful instrument that can also ruin you: use it responsibly.

[2] Provided said services have never been deployed in your organisation before: introducing them might well be a good idea overall, but that may go beyond the scope of whatever you are working on.

[3] Say a request needs multiple things from the database, some of which have been cached: there is an opportunity for inconsistency, where you mix stale data with fresh data.